Highlights

- Cyber criminals are using Facebook to impersonate popular generative AI brands, including ChatGPT, Google Bard, Midjourney and Jasper

- Facebook users are being tricked into downloading content from the fake brand pages and ads

- These downloads contain malware that steals their online passwords (banking, social media, gaming, etc.), crypto wallets and any information saved in their browser

- Unsuspecting users are liking and commenting on fake posts, thereby spreading them to their own social networks

Cyber criminals continue to try new ways to steal private information. A new scam uncovered by Check Point Research (CPR) uses Facebook to trick unsuspecting people out of their passwords and private data by taking advantage of their interest in popular generative AI applications.

First, the criminals create fake Facebook pages or groups for a popular brand, complete with engaging content. The unsuspecting person comments on or likes the content, thereby ensuring it shows up in the feeds of their friends. The fake page offers a new service or special content via a link. But when the user clicks on the link, they unknowingly download malware designed to steal their online passwords, crypto wallets and other information saved in their browser.

Many of the fake pages offer tips, news and enhanced versions of AI services such as Google Bard or ChatGPT:

The above is just a small sample of posts. There are many variants, such as Bard New, Bard Chat, GPT-5, G-Bard AI and others. Some posts and groups also try to take advantage of the popularity of other AI services such as Midjourney:

In many cases, cyber criminals also lure users to other AI services and tools. Another large AI brand impersonated by cyber criminals is Jasper AI, with over 2 million fans. This too shows how small details can play an important role and mean the difference between a legitimate service and a scam.

Users often have no idea that these are scams. In fact, they are passionately discussing the role of AI in the comments and liking/sharing the posts, which spreads their reach even further.

Most of those Facebook pages lead to similar landing pages, which encourage users to download password-protected archive files that are allegedly related to generative AI engines:

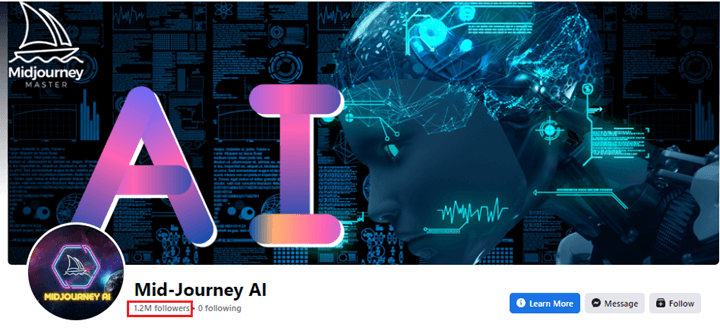

Case Study: Midjourney AI fake page

The threat actors behind certain malicious Facebook pages go to great lengths to ensure they appear authentic, bolstering the apparent social credibility. When an unsuspecting user searches for ‘Midjourney AI’ on Facebook and encounters a page with 1.2 million followers, they are likely to believe it is an authentic page.

The same principle applies to other indicators of page legitimacy: when posts on the fake page have numerous likes and comments, it indicates that other users have already interacted positively with the content, reducing the likelihood of suspicion.

The primary objective of this fake Midjourney AI Facebook page is to trick users into downloading malware. To lend an air of credibility, the links to malicious websites are mixed with links to legitimate Midjourney reviews or social networks:

The first link, ai-midjourney[.]net, has only one button, Get Started:

This button eventually redirects to the second fake site, midjourneys[.]info, offering to download Midjourney AI Free for 30 days. When the user clicks the button, they actually download an archive file called MidJourneyAI.rar from Gofile, a free file sharing and storage platform.
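The two domains above are written in defanged form (with `[.]`) so that they cannot be clicked by accident. As a minimal sketch of how a defender might turn such indicators into a simple hostname blocklist, the following Python helper converts between the two forms and checks hostnames against the list. The function names and the blocklist structure are illustrative assumptions, not part of any CPR tooling.

```python
# Domains named in this article, in defanged form (the "[.]" stops accidental clicks).
DEFANGED_IOCS = ["ai-midjourney[.]net", "midjourneys[.]info"]


def refang(indicator: str) -> str:
    """Turn a defanged indicator such as 'example[.]com' back into 'example.com'."""
    return indicator.replace("[.]", ".")


def defang(indicator: str) -> str:
    """Make an indicator safe to paste into a report: 'example.com' -> 'example[.]com'."""
    return indicator.replace(".", "[.]")


# Hypothetical in-memory blocklist built from the indicators above.
BLOCKLIST = {refang(domain).lower() for domain in DEFANGED_IOCS}


def is_blocked(hostname: str) -> bool:
    """Return True if hostname is a blocklisted domain or a subdomain of one."""
    host = hostname.lower().rstrip(".")
    return any(host == domain or host.endswith("." + domain) for domain in BLOCKLIST)


print(is_blocked("www.midjourneys.info"))  # subdomain of a listed domain
print(is_blocked("midjourney.com"))        # not on the list
```

Matching on the full domain or a dot-prefixed suffix avoids false positives on unrelated hosts that merely end with the same characters.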

Once the download finishes, the victim, who expects to have downloaded the legitimate Midjourney installer, is deceived into running a malicious file named Mid-Journey_Setup.exe.

This fake setup file delivers Doenerium, an open-source infostealer that has been observed in multiple other scams and whose ultimate goal is to harvest victims’ personal data.

The malware stores itself and its auxiliary files and directories in the TEMP folder:

The malware uses multiple legitimate services, such as GitHub, Gofile and Discord, for command and control communication and data exfiltration. For example, the GitHub account antivirusevasion69 is used to deliver a Discord webhook to the malware, which then uses it to report all the information stolen from the victim to the actor’s Discord channel.

First, the malware dispatches a “New victim” message to Discord, providing a description of the newly infected machine. The description includes details such as the PC name, OS version, RAM, uptime, and the specific path from which the malware was executed. This information allows the actor to discern precisely which scam or lure led to the installation of the malware.

The malware attempts to gather various types of information from all the major browsers, including cookies, bookmarks, browsing history, and passwords. Additionally, it targets cryptocurrency wallets including Zcash, Bitcoin, Ethereum, and others. Furthermore, the malware steals FTP credentials from FileZilla and sessions from various social and gaming platforms.

Once all the data is stolen from the targeted machine, it is consolidated into a single archive and uploaded to the file-sharing platform Gofile:

Subsequently, the infostealer sends an “Infected” message to Discord, containing organized details about the data it successfully extracted from the machine, along with a link to access the archive containing the stolen information.
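Because the reporting described above relies on a Discord webhook, one defensive angle is to look for webhook URLs in places they should not appear, such as proxy logs or strings extracted from a suspicious file. The sketch below assumes the defender has text lines containing full URLs (for example from a TLS-inspecting proxy); the log format and sample lines are hypothetical and invented for illustration. The pattern covers the common `discord.com/api/webhooks/<id>/<token>` shape and may not catch every variant.

```python
import re

# Discord webhook URLs follow https://discord.com/api/webhooks/<id>/<token>;
# the older discordapp.com host and versioned paths (/api/v10/...) also occur.
WEBHOOK_RE = re.compile(
    r"https?://(?:[\w-]+\.)?discord(?:app)?\.com/api/(?:v\d+/)?webhooks/\d+/[\w-]+"
)


def find_discord_webhooks(lines):
    """Return (line_number, url) pairs for every webhook URL found in lines."""
    hits = []
    for number, line in enumerate(lines, start=1):
        for url in WEBHOOK_RE.findall(line):
            hits.append((number, url))
    return hits


# Hypothetical log lines, invented for illustration only.
sample_log = [
    "10:01:02 host-a GET https://example.com/index.html",
    "10:01:05 host-b POST https://discord.com/api/webhooks/123456789012345678/AbC-dEf_123",
]
print(find_discord_webhooks(sample_log))
```

A hit is not proof of infection, since legitimate tools also post to Discord webhooks, but on a machine where no such tool is expected it is a reasonable trigger for a closer look.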

It is worth noting that most of the comments on the fake Facebook page are made by bots with Vietnamese names, and that the default chat language on a fake Midjourney site is Vietnamese. This allows us to assess, with low-to-medium confidence, that this campaign is run by a Vietnamese-affiliated threat actor.

The following are examples of replies to one of the posts on the page:

The Rise of Infostealers

Most of the campaigns using fake pages and malicious ads on Facebook eventually deliver some kind of information-stealing malware. In the past month, CPR and other security companies observed multiple campaigns that distribute malicious browser extensions aimed at stealing information. Their main target appears to be data associated with Facebook accounts and the theft of Facebook pages. It seems the cyber criminals are trying to abuse existing pages with large audiences, including their advertising budgets, so even pages with a large reach could be exploited in this way to spread the scam further.

Another campaign exploiting the popularity of AI tools uses a “GoogleAI” lure to deceive users into downloading malicious archives, which contain malware in a single batch file, such as GoogleAI.bat. As in many similar attacks, it uses an open-source code-sharing platform, this time GitLab, to retrieve the next stage:

The final payload is located in a Python script called libb1.py. This is a Python-based browser stealer that attempts to steal login data and cookies from all of the major browsers, and the stolen data is exfiltrated via Telegram:

The previously described campaigns rely extensively on various free services and social networks, as well as open-source tools, and lack significant sophistication. However, not all campaigns follow this pattern. Check Point Research has recently uncovered many sophisticated campaigns that employ Facebook ads and compromised accounts disguised, among other things, as AI tools. These advanced campaigns introduce a new, stealthy stealer-bot, BundleBot, that operates under the radar. The malware abuses the dotnet bundle (single-file), self-contained format, which results in very low or no static detection at all. BundleBot is focused on stealing Facebook account information, rendering these campaigns self-sustaining or self-feeding: the stolen data might subsequently be used to propagate the malware through newly compromised accounts.

Conclusion

The increasing public interest in AI-based solutions has led threat actors to exploit this trend, particularly those distributing infostealers. This surge can be attributed to the expanding underground markets, where initial access brokers specialize in acquiring and selling access or credentials to compromised systems. Additionally, the growing value of data used for targeted attacks, such as business email compromise and spear-phishing, has fueled the proliferation of infostealers.

Unfortunately, authentic AI services make it possible for cyber criminals to create and deploy scams in a much more sophisticated and believable way. Therefore, it is essential for individuals and organizations to educate themselves, be aware of the risks and stay vigilant against the tactics of cyber criminals. Advanced security solutions remain important in protecting against these evolving threats.

How to Identify Phishing and Impersonation

Phishing attacks use trickery to convince the target that they are legitimate. Some of the ways to detect a phishing attack are to:

- Ignore Display Names: Phishing sites or emails can be configured to show anything in the display name. Instead of looking at the display name, check the sender’s email or web address to verify that it comes from a trusted and authentic source.

- Verify the Domain: Phishers will commonly use domains with minor misspellings or domains that seem plausible. For example, company.com may be replaced with cormpany.com, or an email may come from company-service.com. Look for these misspellings; they are good indicators.

- Always Download Software from Trusted Sources: Facebook groups are not a place from which to download software to your computer. Go directly to a trusted source and use its official webpage. Do not click on downloads coming from groups, unofficial forums, etc.

- Check the Links: URL phishing attacks are designed to trick recipients into clicking on a malicious link. Hover over the links within an email and see if they actually go where they claim. Enter suspicious links into a phishing verification tool like phishtank.com, which will tell you if they are known phishing links. If possible, don’t click on a link at all; visit the company’s site directly and navigate to the indicated page.
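The "Verify the Domain" advice above can be partly automated. The following is a minimal sketch, assuming a hypothetical allowlist of domains you trust, that flags a domain when it is within a small edit distance of a trusted one, as with the company.com and cormpany.com example. The threshold of 2 and the function names are illustrative choices, not an established standard.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: minimum single-character insertions, deletions or substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + cost))
        prev = curr
    return prev[-1]


# Hypothetical allowlist; replace with the domains you actually trust.
TRUSTED_DOMAINS = ["company.com"]


def lookalike_of(domain, trusted=TRUSTED_DOMAINS, max_distance=2):
    """Return the trusted domain that `domain` closely imitates, or None."""
    domain = domain.lower()
    if domain in trusted:
        return None  # exact match: this is the real domain
    for good in trusted:
        if edit_distance(domain, good) <= max_distance:
            return good
    return None


print(lookalike_of("cormpany.com"))         # one inserted letter away from company.com
print(lookalike_of("company.com"))          # the genuine domain is not flagged
print(lookalike_of("company-service.com"))  # too different for this check to flag
```

As the last call suggests, edit distance only catches near misspellings. Plausible-looking additions such as company-service.com need a different check, for example comparing against the organization's published list of official domains.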