Check Point Software Prevents Potential ChatGPT and Bard Data Breaches

Preventing leakage of sensitive and confidential data when using Generative AI apps

Like all new technologies, ChatGPT, Google Bard, Microsoft Bing Chat, and other Generative AI services come with classic trade-offs: innovation and productivity gains versus data security and safety. Fortunately, there are simple measures that organizations can take immediately to protect themselves against the inadvertent leakage of confidential or sensitive information by employees using these new apps.

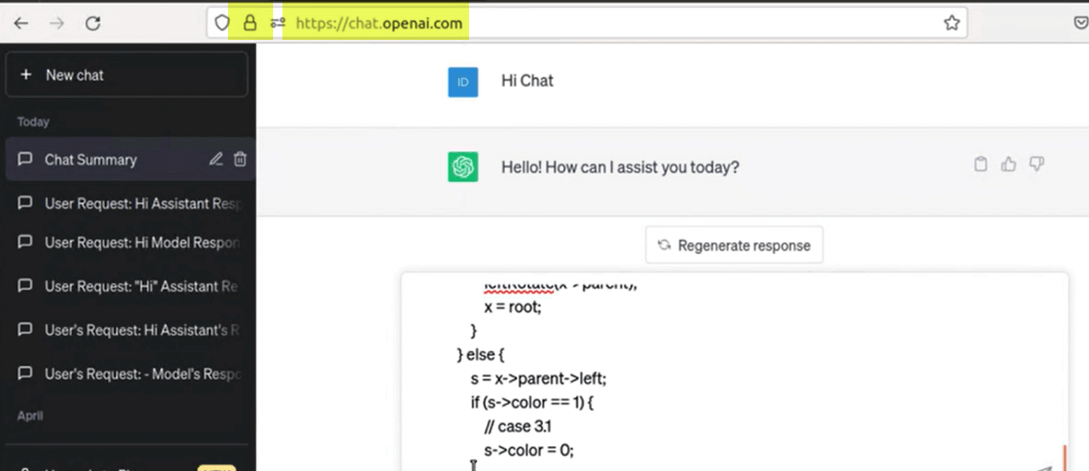

The use of Large Language Models (LLMs) like ChatGPT has become very popular among employees, for example when they need quick guidance or help writing software code.

Unfortunately, these web-scale ‘productivity’ tools are also perfectly positioned to become the single largest source of accidental leaks of confidential business data in history, by an order of magnitude. Tools like ChatGPT or Bard make it incredibly easy for employees to inadvertently submit confidential or privacy-protected information as part of their queries.

How Check Point Secures Organizations That Use Services like ChatGPT, Google Bard, and Microsoft Bing Chat

Many AI services state that they do not cache submitted information or use uploaded data to train their AI engines. However, there have been multiple incidents, specifically with ChatGPT, that have exposed vulnerabilities in the system. The ChatGPT team recently fixed a key vulnerability that exposed personal information, including credit card details and chat messages.

Check Point Software, a leading global security vendor, provides specific ChatGPT/Bard security flags and filters to block confidential or protected data from being uploaded to these AI cloud services.

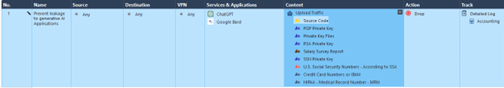

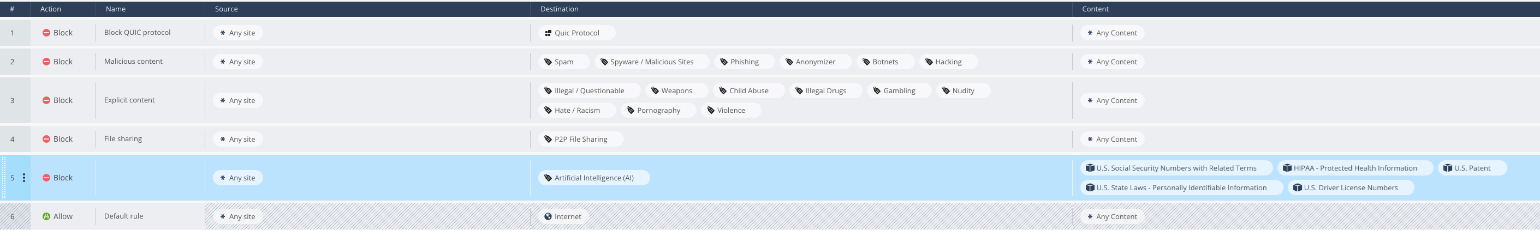

Check Point empowers organizations to prevent the misuse of Generative AI by using Application Control and URL Filtering to identify and block access to these apps, and Data Loss Prevention (DLP) to prevent inadvertent data leakage while employees use them.

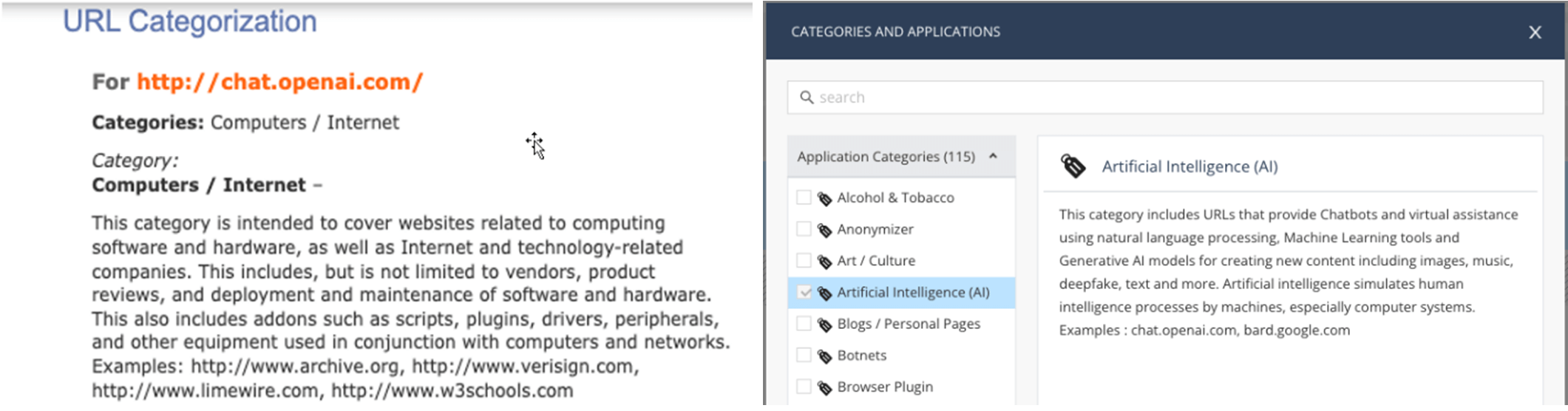

New AI Category Added

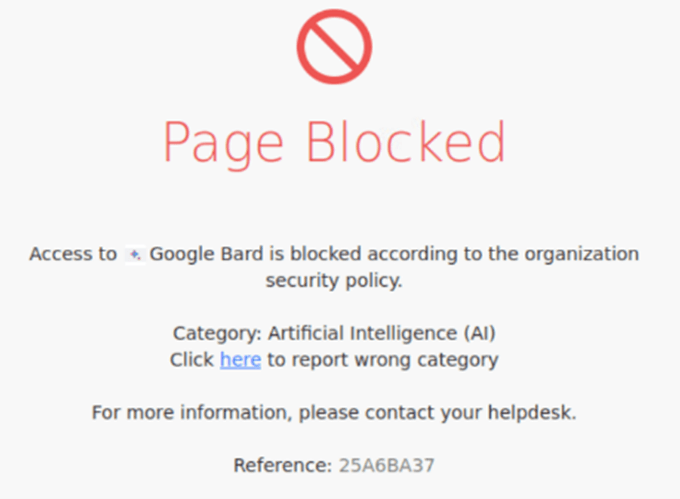

Check Point provides URL filtering for Generative AI identification through a new AI category added to its suite of traffic management controls (see images below). This allows organizations to block the unauthorized use of non-approved Generative AI apps through granular secure web access control for on-premises, cloud, endpoint, mobile device, and Firewall-as-a-Service users.
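To illustrate the general idea behind category-based URL filtering, here is a minimal Python sketch. It is not Check Point's categorization service or policy API: the in-memory `URL_CATEGORIES` table, the category labels, the `ai.example-corp.com` "approved" host, and the `lookup_category`/`decide` helpers are hypothetical assumptions. The request's host is matched against the table (including parent domains), and any host in a blocked category is denied unless it has been explicitly approved.

```python
from urllib.parse import urlsplit

# Hypothetical category table (host or parent domain -> category label).
# A production URL filtering engine queries a vendor-maintained
# classification service instead of a hard-coded table like this one.
URL_CATEGORIES = {
    "openai.com": "Generative AI",
    "bard.google.com": "Generative AI",
    "ai.example-corp.com": "Generative AI",  # hypothetical in-house AI app
    "news.example.com": "News",
}

# Hypothetical policy: block the Generative AI category by default,
# but allow one approved, company-sanctioned AI host.
BLOCKED_CATEGORIES = {"Generative AI"}
APPROVED_HOSTS = {"ai.example-corp.com"}


def lookup_category(host: str) -> str:
    """Match the host, then each parent domain, against the category table."""
    labels = host.lower().split(".")
    # Stop before the bare top-level domain (e.g. "com").
    for i in range(len(labels) - 1):
        candidate = ".".join(labels[i:])
        if candidate in URL_CATEGORIES:
            return URL_CATEGORIES[candidate]
    return "Uncategorized"


def decide(url: str) -> str:
    """Return "allow" or "block" for a requested URL."""
    host = (urlsplit(url).hostname or "").lower()
    if host in APPROVED_HOSTS:  # explicit exceptions win over category rules
        return "allow"
    if lookup_category(host) in BLOCKED_CATEGORIES:
        return "block"
    return "allow"


if __name__ == "__main__":
    for url in ("https://chat.openai.com/", "https://ai.example-corp.com/", "https://www.example.org/"):
        print(url, "->", decide(url))
```

Checking the approved-host list before the category lookup is what lets an organization block a whole category while still sanctioning specific apps.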

In addition, Check Point’s firewalls and Firewall-as-a-Service also include data loss prevention (DLP). This enables network security admins to block the upload of specific data types (software code, PII, confidential info, etc.) when using Generative AI apps like ChatGPT and Bard.
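To make the DLP idea concrete, here is a minimal Python sketch of inspecting content before it is uploaded to a Generative AI app. It is not Check Point's DLP engine or its data-type definitions: the regular expressions, the type names (`credit_card`, `private_key`, `confidential_label`, `source_code`), the `"Generative AI"` destination label, and the `classify`/`dlp_verdict` helpers are hypothetical assumptions. Card-number candidates are confirmed with a Luhn checksum to reduce false positives from arbitrary digit runs.

```python
import re

# Illustrative detectors for a few data types a DLP policy might cover.
# These patterns are simplified assumptions, not a vendor's data types.
CARD_CANDIDATE = re.compile(r"(?<!\d)(?:\d[ -]?){12,18}\d(?!\d)")  # 13-19 digits
PRIVATE_KEY = re.compile(r"-----BEGIN (?:[A-Z]+ )*PRIVATE KEY-----")
CONFIDENTIAL_LABEL = re.compile(r"\b(?:confidential|internal use only)\b", re.IGNORECASE)
SOURCE_CODE = re.compile(
    r"^\s*(?:def |class |import |from \S+ import |#include\b|package )",
    re.MULTILINE,
)

DEFAULT_BLOCKED = frozenset({"credit_card", "private_key", "confidential_label", "source_code"})


def luhn_ok(digits: str) -> bool:
    """Luhn checksum: filters out digit runs that cannot be card numbers."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def classify(text: str) -> set:
    """Return the set of sensitive data types detected in the text."""
    found = set()
    for match in CARD_CANDIDATE.finditer(text):
        if luhn_ok(re.sub(r"\D", "", match.group())):
            found.add("credit_card")
            break
    if PRIVATE_KEY.search(text):
        found.add("private_key")
    if CONFIDENTIAL_LABEL.search(text):
        found.add("confidential_label")
    if SOURCE_CODE.search(text):
        found.add("source_code")
    return found


def dlp_verdict(text: str, destination_category: str,
                blocked_types: frozenset = DEFAULT_BLOCKED) -> str:
    """Block uploads to Generative AI apps that contain a blocked data type."""
    if destination_category != "Generative AI":
        return "allow"
    return "block" if classify(text) & blocked_types else "allow"
```

Regex-based detection like this is only a rough heuristic: it can miss reformatted data and flag some harmless text, which is why the set of blocked types is kept as a policy parameter rather than hard-coded into the check.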

Check Point customers can turn on fine-grained or broad security measures to protect against the misuse of ChatGPT, Bard, or Bing Chat. Configuration takes just a few clicks in Check Point’s unified security policy (see images below).

If organizations don’t have effective security in place for these types of AI apps and services, their private data could become part of large language models and their query responses, in turn potentially making confidential or proprietary data available to an unlimited number of users or bad actors.

Protecting against Data Loss

Because of the explosive popularity of these services, organizations are looking for the best ways to secure their data, either by blocking access to these apps or by enabling safe use of Generative AI services. An important first step is to educate employees to apply basic security hygiene and common sense to their queries: don’t submit software code or any information that your employer would not want shared publicly. The moment the data leaves your laptop or mobile phone, it is no longer in your control.

Another very common but honest mistake is when employees email a work file to their personal Gmail account so they can finish their work at home on the family Mac or PC. Employees also unwittingly share documents with their team members’ personal Google accounts. Unfortunately, by sharing access to documents with non-corporate accounts, employees inadvertently make it much easier for hackers to access their company’s confidential data.

Check Point Research Analyzes the Use of Generative AI to Create New Cyber Attacks

Since the commercial launch of OpenAI’s ChatGPT at the end of 2022, Check Point Research (CPR) has continuously analyzed the safety and security of popular generative AI tools. Meanwhile, the generative AI industry has focused on promoting the technology’s revolutionary benefits while downplaying its security risks to organizations and IT systems.

CPR’s analysis of ChatGPT-4 has highlighted potential scenarios in which it could accelerate cybercrime. CPR has published reports showing how AI models can be used to create a full infection flow, from spear-phishing to running reverse shell code, backdoors, and even encryption code for ransomware attacks. The rapid evolution of orchestrated and automated attack methods raises serious concerns about organizations’ ability to secure their key assets against highly evasive and damaging attacks. While OpenAI has implemented some security measures to prevent malicious content from being generated on its platform, CPR has already shown how cybercriminals are working their way around such restrictions.

Check Point continues to track the latest developments in Generative AI technology, including ChatGPT-4 and Google Bard. This research plays a key role in determining the most effective mechanisms for preventing AI-driven attack techniques.

Learn more about relevant Check Point solutions:

- Quantum Firewalls/Gateways

- Harmony Connect (SASE/Firewall-as-a-Service)

- CyberTalk.org blog: 5 ways ChatGPT and LLMs can advance cyber security

- Discuss ChatGPT Security on CheckMates Community (Check Point Users)

- AI for Cyber Security

- GUI: Setting security rules for Generative AI applications (ChatGPT, Google Bard)

- View Short Video for Check Point Firewall Config Steps

Simple Firewall Policy Management with New AI Category for URL Filtering


Control Access to Generative AI with URL Categorization

Example of a “Page Blocked” Alert

Firewall: Data Loss Prevention (DLP) Policy Rule for Restricted Use of AI

Rule for Blocking a Specific Service (Example: Bing Chat)


Firewall-as-a-Service (SSE with Check Point Harmony Connect)

Rule #5 below restricts the type of content that can be uploaded to Generative AI services

Learn About Data Loss Prevention

Sign up for a Security Risk Assessment